Untitled

Posted on

test

测试信息打印

默认情况下,pytest 会捕获所有的输出。这意味着在测试运行期间,

print语句的输出不会显示在控制台上。如果想看到

print语句的输出,需要使用-s选项来告诉 pytest 不要捕获输出1

pytest -s test_web_base.py

测试指定方法

1

pytest tests/unit_tests/document_loaders/test_web_base.py::类名::方法名

测试被标记的方法

1

2

3@pytest.mark.finished

def test_func1():

assert 1 == 11

pytest -m finished tests/test-function/test_with_mark.py

python fire

Posted on

In

python

github copilot

Posted on

In

github

GPU learn

Posted on

In

GPU

python pydantic

model_validator

model_validator是 Pydantic 库提供的一个装饰器,用于定义模型验证器函数。模型验证器函数是一种特殊的方法,用于在创建模型实例或更新模型属性时执行自定义的验证逻辑。

1 | @model_validator(mode="after") |

或

1 | @model_validator(mode="before") |

llm agent

Agent = Large Language Model (LLM) + Observation + Thought + Action + Memory

MultiAgent = Agents + Environment + Standard Operating Procedure (SOP) + Communication + Economy

ReAct1(reason, act) 是一种使用自然语言推理解决复杂任务的语言模型范例,ReAct旨在用于允许LLM执行某些操作的任务

reference

raycast awesome

keyboard

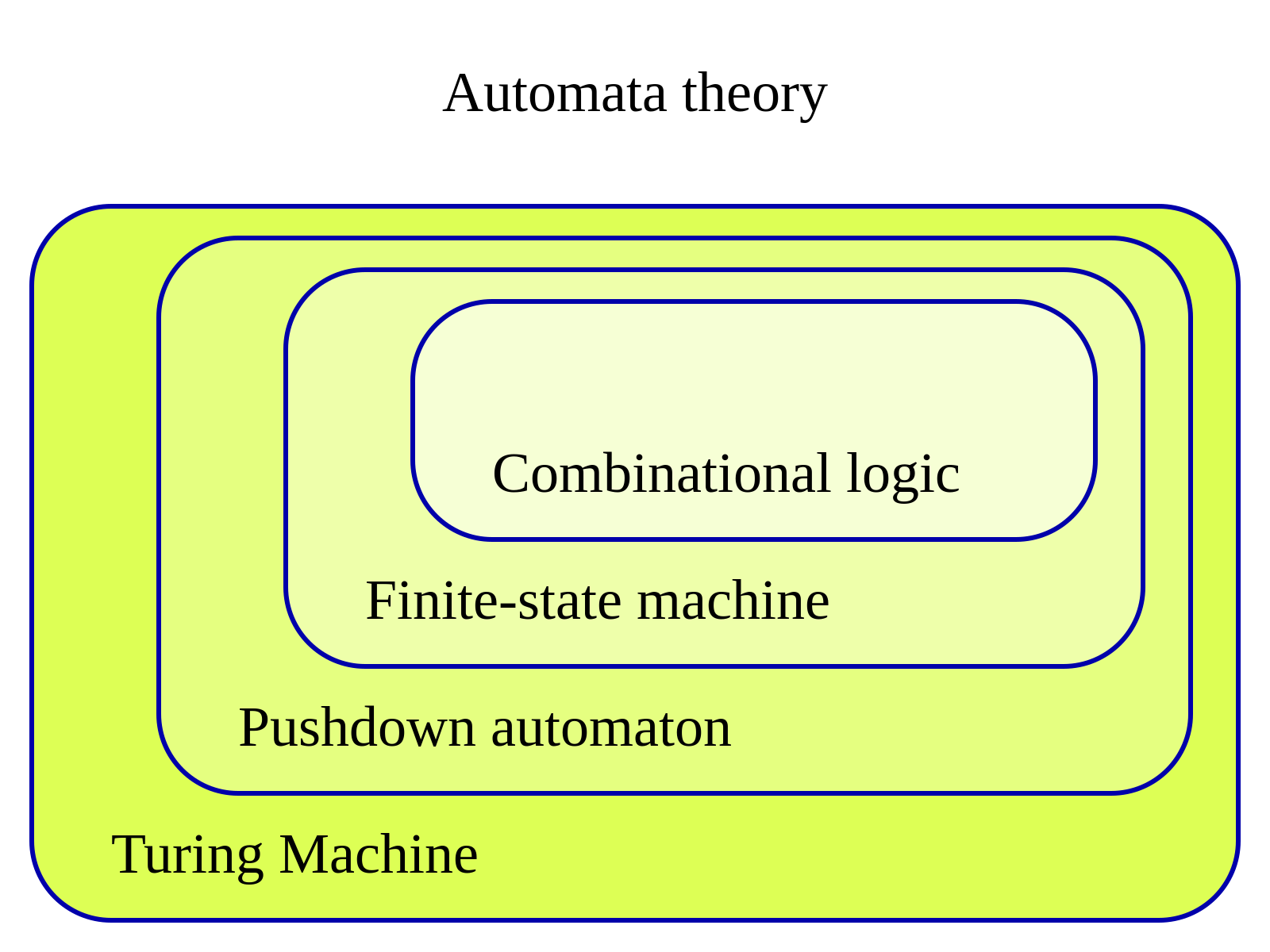

finite state machine

Posted on

In

fsm

The FSM can change from one state to another in response to some inputs; the change from one state to another is called a transition.

reference